For years, removing background noise from audio meant trade-offs. You either reduced noise and damaged the voice, or preserved the voice and lived with the noise. That limitation wasn’t a UX problem; it was a core technical constraint.

What changed recently isn’t just better tools, but a shift in how machines understand sound itself. To understand the best way to remove background noise from audio today, we need to look at what’s happening under the hood.

Why Traditional Noise Reduction Always Fell Short

Classic audio noise reduction relied on spectral subtraction: estimating the noise spectrum and subtracting it from the recording.

The workflow looked like this:

- Identify a noise profile

- Subtract that profile from the full signal

- Apply smoothing to reduce artifacts
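
The three steps above are commonly known as spectral subtraction. The following is a minimal sketch of that idea, not the implementation of any particular tool. It assumes a separate noise-only clip is available for the profile; the function name `spectral_subtract` and the parameters `floor` and `nperseg` are illustrative choices, and the 3-frame moving average is just one simple way to smooth the gain.

```python
import numpy as np
from scipy.ndimage import uniform_filter1d
from scipy.signal import stft, istft


def spectral_subtract(noisy, noise_clip, fs=16000, nperseg=512, floor=0.05):
    """Classic magnitude spectral subtraction with a fixed noise profile."""
    # 1. Noise profile: average magnitude per frequency bin of a noise-only clip.
    _, _, noise_spec = stft(noise_clip, fs=fs, nperseg=nperseg)
    noise_profile = np.abs(noise_spec).mean(axis=1, keepdims=True)

    # 2. Subtract the profile from every frame, expressed as a per-bin gain:
    #    (mag - profile) / mag == 1 - profile / mag
    _, _, spec = stft(noisy, fs=fs, nperseg=nperseg)
    mag = np.abs(spec)
    gain = 1.0 - noise_profile / (mag + 1e-12)

    # 3. Floor the gain and smooth it over time to limit "musical noise".
    gain = np.maximum(gain, floor)
    gain = uniform_filter1d(gain, size=3, axis=1)

    # Resynthesize using the (noisy) phase of the original signal.
    _, out = istft(gain * spec, fs=fs, nperseg=nperseg)
    return out[: len(noisy)]


# Synthetic demo: a 440 Hz tone stands in for the "voice", white noise for the hum.
rng = np.random.default_rng(0)
t = np.arange(16000) / 16000
tone = 0.5 * np.sin(2 * np.pi * 440 * t)
noisy = tone + 0.1 * rng.standard_normal(t.size)
noise_only = 0.1 * rng.standard_normal(t.size)

cleaned = spectral_subtract(noisy, noise_only)
print("error before:", np.mean((noisy - tone) ** 2))
print("error after: ", np.mean((cleaned - tone) ** 2))
```

The demo behaves well because its noise is stationary and the tone sits in a single narrow band. Real speech spreads across many bins that also contain noise, so the same subtraction removes speech energy along with it.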

Technically, this approach assumes:

- Noise is stationary

- Speech and noise occupy separate frequency bands

In real-world recordings, neither is true.

Human speech overlaps heavily with:

- HVAC hum

- Traffic noise

- Crowd ambience

- Room echo

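As a result, traditional filters often removed parts of the voice itself. When speech and noise share the same frequency bands, subtracting the noise estimate also subtracts speech energy, so from a signal-processing standpoint some damage was unavoidable.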

This is why older tools never represented the best way to remove background noise from audio—only the least damaging compromise.

The Key Breakthrough: Source Separation Instead of Noise Removal

Modern AI tools don’t “remove noise” in the classical sense.

They separate sound sources.

Instead of asking:

“What frequencies should I delete?”

AI asks:

“Which parts of this signal belong to human speech?”

This is a fundamental shift.

Using deep neural networks trained on massive datasets, modern systems learn:

- Temporal speech patterns

- Harmonic structures unique to voices

- Contextual cues across time

Noise is no longer filtered out—it’s ignored during reconstruction.

This is the foundation of the best way to remove background noise from audio today.

How Modern AI Noise Reduction Actually Works

At a high level, the pipeline looks like this:

- Time-Frequency Decomposition: Audio is transformed into a spectrogram using STFT or similar techniques.

- Neural Mask Prediction: The model predicts a probability mask that identifies speech-dominant regions.

- Voice Reconstruction: Only voice-relevant components are reconstructed into the output signal.

- Post-Processing Optimization: Minor smoothing preserves natural timbre and avoids metallic artifacts.
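
The decompose, mask, and reconstruct steps can be sketched as follows. This is an illustration under strong assumptions, not how DeVoice or any specific product works. There is no neural network here: `oracle_ratio_mask` is a hypothetical stand-in that computes the mask from the known clean and noise signals, which is only possible in a synthetic demo. A trained model would have to predict a similar mask from the noisy mixture alone. The constants `FS` and `NPERSEG` are arbitrary illustrative values.

```python
import numpy as np
from scipy.signal import stft, istft

FS = 16000      # sample rate in Hz (assumed for this demo)
NPERSEG = 512   # STFT window length in samples (assumed)


def oracle_ratio_mask(clean, noise):
    """Stand-in for the neural network: a ratio mask built from known sources.

    Values lie in [0, 1]; values near 1 mark speech-dominant time-frequency cells.
    """
    _, _, clean_spec = stft(clean, fs=FS, nperseg=NPERSEG)
    _, _, noise_spec = stft(noise, fs=FS, nperseg=NPERSEG)
    clean_pow = np.abs(clean_spec) ** 2
    noise_pow = np.abs(noise_spec) ** 2
    return clean_pow / (clean_pow + noise_pow + 1e-12)


def apply_mask(noisy, mask):
    """Decompose the mixture, keep the masked cells, and resynthesize audio."""
    _, _, mix_spec = stft(noisy, fs=FS, nperseg=NPERSEG)   # time-frequency decomposition
    _, out = istft(mix_spec * mask, fs=FS, nperseg=NPERSEG)  # reconstruction
    return out[: len(noisy)]


# Synthetic demo: a tone stands in for the voice, white noise for the background.
rng = np.random.default_rng(0)
t = np.arange(FS) / FS
voice = 0.5 * np.sin(2 * np.pi * 300 * t)
background = 0.1 * rng.standard_normal(t.size)
mixture = voice + background

mask = oracle_ratio_mask(voice, background)
enhanced = apply_mask(mixture, mask)
print("error before:", np.mean((mixture - voice) ** 2))
print("error after: ", np.mean((enhanced - voice) ** 2))
```

The design point is that the mask varies per frame and per frequency bin, so it can follow a moving voice and changing noise instead of relying on one fixed profile. The final post-processing step from the list is omitted here.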

What matters here is that the system never estimates a noise profile and subtracts it.

The output is rebuilt from the time-frequency regions the model attributes to speech.

This approach defines the current best way to remove background noise from audio, especially for spoken content.

Why AI Outperforms Manual Editing at Scale

Manual editing tools offer control, but control does not equal intelligence.

Manual workflows typically:

- Work locally in time

- Apply static rules

- Cannot model speech semantics

AI models, by contrast:

- Analyze entire recordings holistically

- Adapt dynamically to different speakers

- Generalize across environments

This is why AI-based systems tend to outperform manual workflows when users want to remove background noise from audio online, for free, quickly, and reliably.

Real-World Constraints That Matter More Than Raw Accuracy

From a technical standpoint, noise removal is not just about decibel reduction.

The real challenges are:

- Preserving consonant clarity

- Maintaining vowel warmth

- Avoiding phase distortion

- Keeping speech intelligibility intact

Many tools reduce noise aggressively but fail here.

The best way to remove background noise from audio must optimize perceptual quality, not just signal metrics.
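
To make "signal metrics" concrete, here is a small sketch of one widely used measure, the scale-invariant signal-to-distortion ratio (SI-SDR). It is offered only as an example of the kind of number that is easy to compute against a clean reference; the function name `si_sdr` and the `eps` parameter are illustrative. A high score here does not by itself guarantee clear consonants or warm vowels, which is why perceptual measures such as PESQ or STOI, and listening tests, are commonly used alongside it.

```python
import numpy as np


def si_sdr(estimate, reference, eps=1e-12):
    """Scale-invariant signal-to-distortion ratio in dB (higher is better)."""
    estimate = estimate - estimate.mean()
    reference = reference - reference.mean()

    # Project the estimate onto the reference to find the "target" component.
    scale = np.dot(estimate, reference) / (np.dot(reference, reference) + eps)
    target = scale * reference

    # Everything that is not explained by the reference counts as distortion.
    residual = estimate - target
    return 10.0 * np.log10(np.sum(target ** 2) / (np.sum(residual ** 2) + eps))


# Synthetic demo: the same reference with light and heavy added noise.
rng = np.random.default_rng(0)
reference = np.sin(2 * np.pi * 220 * np.arange(8000) / 16000)
lightly_noisy = reference + 0.01 * rng.standard_normal(reference.size)
heavily_noisy = reference + 0.5 * rng.standard_normal(reference.size)

print("light noise SI-SDR (dB):", si_sdr(lightly_noisy, reference))
print("heavy noise SI-SDR (dB):", si_sdr(heavily_noisy, reference))
```

Because the metric is scale-invariant, making the output louder or quieter does not change the score. Only the part of the output that cannot be explained by the reference is penalized.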

This is where modern AI pipelines—like the one used by DeVoice—gain an edge by optimizing for how humans actually perceive sound.

Latency, Scalability, and Online Processing

Another overlooked factor is processing architecture.

Older tools:

- Require local compute

- Scale poorly

- Depend on device performance

Modern online systems:

- Use GPU-accelerated inference

- Scale elastically

- Deliver consistent output

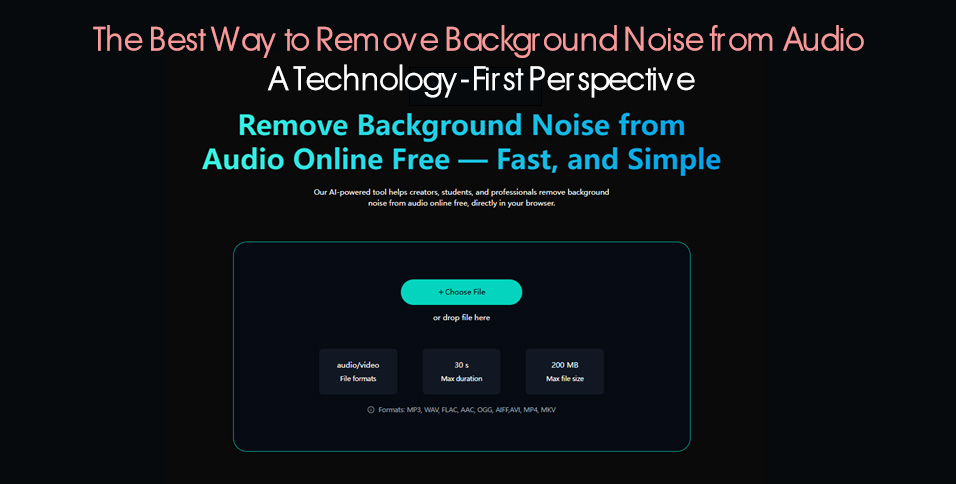

This makes it realistic to remove background noise from audio online for free without compromising quality or speed.

From a systems perspective, this shift is as important as the model itself.

Why DeVoice Reflects This New Technical Standard

Without turning this into a feature list, it’s worth noting why DeVoice consistently performs well in technical comparisons.

Its pipeline:

- Focuses on speech-first reconstruction

- Avoids destructive frequency filtering

- Optimizes for real-world recordings, not studio samples

That alignment with modern source-separation theory is why many users experience it as the best way to remove background noise from audio, especially in uncontrolled environments.

The Future: Beyond Noise Removal

Noise reduction is becoming a solved problem—but it’s also evolving.

Next-generation systems are moving toward:

- Speaker-aware enhancement

- Context-aware reconstruction

- Emotion-preserving voice modeling

In that sense, removing background noise from audio is no longer an isolated task. It’s part of a broader shift toward semantic audio understanding.

Final Thoughts

If you’re evaluating tools today, don’t just ask what they remove—ask how they think.

The best way to remove background noise from audio isn’t about stronger filters or more knobs. It’s about whether the system understands speech as a structured signal, not just a waveform.

That technical shift is what finally made clean audio accessible to everyone—not just engineers.