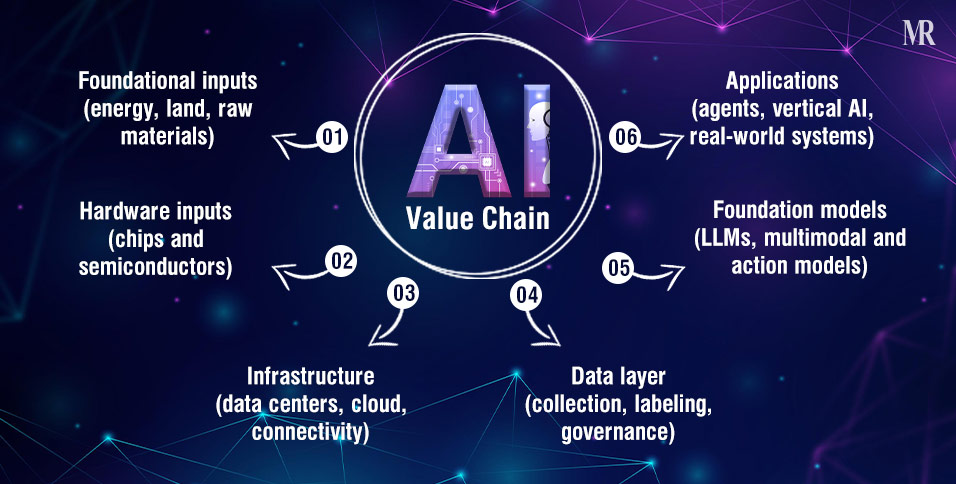

Artificial intelligence is no longer just a software story. It is a physical, financial, and geopolitical system that stretches from power grids and silicon mines to data centers, models, and enterprise workflows.

This interconnected system is known as the AI value chain.

Understanding the artificial intelligence value chain is no longer optional for governments, investors, founders, or business leaders. It explains where value is created, where it concentrates, where bottlenecks form, and who ultimately captures economic power in the intelligent economy.

Unlike past technology cycles, AI does not scale on code alone. It scales on energy, land, compute, capital, and trust. That is why today’s AI race is as much about infrastructure and governance as it is about algorithms.

This guide breaks down the six core layers of the AI value chain, explains how they interact, and shows how intelligence moves from raw resources to real-world impact.

What Is the AI Value Chain?

The AI value chain describes the full lifecycle of artificial intelligence, from physical inputs to deployed applications, showing how intelligence is created, scaled, and monetized.

At a high level, the artificial intelligence value chain includes:

- Foundational inputs (energy, land, raw materials)

- Hardware inputs (chips and semiconductors)

- Infrastructure (data centers, cloud, connectivity)

- Data layer (collection, labeling, governance)

- Foundation models (LLMs, multimodal and action models)

- Applications (agents, vertical AI, real-world systems)

Each layer depends on the previous one. Weakness at any point reduces the value of the entire chain.

This dependency explains why AI leadership is no longer determined by model quality alone, but by the ability to coordinate the full AI stack.
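The dependency between layers can be made concrete with a toy Python sketch. It treats the chain as a series of capacities and assumes that the whole chain can deliver no more than its most constrained layer. The layer names, the 0-to-1 capacity scores, and the weakest-link rule are simplifying assumptions for illustration. They are not a measured model of any real economy or company.

```python
# Toy "weakest link" model of the AI value chain (an illustrative assumption):
# each layer passes on at most its own capacity, so the chain as a whole
# delivers no more than its most constrained layer.

LAYERS = [
    "foundational_inputs",
    "hardware",
    "infrastructure",
    "data",
    "models",
    "applications",
]


def chain_output(capacity: dict[str, float]) -> tuple[float, str]:
    """Return (deliverable output, bottleneck layer) for hypothetical capacities."""
    output = float("inf")
    bottleneck = ""
    for layer in LAYERS:  # walk the chain from physical inputs to applications
        if capacity[layer] < output:
            output = capacity[layer]
            bottleneck = layer
    return output, bottleneck


# Hypothetical, normalized capacities (1.0 = fully provisioned).
example = {
    "foundational_inputs": 0.6,  # e.g. a delayed grid connection
    "hardware": 0.9,
    "infrastructure": 1.0,
    "data": 0.8,
    "models": 1.0,
    "applications": 1.0,
}
print(chain_output(example))
```

In this toy model, improving any layer other than the bottleneck leaves total output unchanged. That is the practical argument for coordinating the full stack instead of optimizing a single layer.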

Now, let’s look at each layer of the AI value chain in detail.

The Six Core Layers of the AI Value Chain

1. Foundational Inputs: The Physical Constraints

The AI value chain begins at the most basic physical level: the provisioning of land, energy, and raw materials.

In practice, intelligence behaves like a physical commodity that requires massive resource inputs. Since 2010, this layer has attracted over $100 billion in direct investment, mostly to power large data centers.

- Energy as the Ultimate Limiter: Data centers are increasingly driven by AI workloads. They are among the fastest-growing sources of electricity demand and already account for roughly 1–2% of global electricity use. A single hyperscale data center can require 300–500 megawatts, comparable to the electricity demand of a mid-sized city.

- Land and “Physical Readiness”: Capital is abundant, but grid-connected land is scarce. In 2024, 87% of US data center projects faced delays due to power or land constraints, with grid interconnection timelines stretching to 7–10 years.

- Geopolitics of Raw Materials: Strategic exposure now includes the supply chains for silicon, copper, aluminum, and rare earth elements necessary for both chips and the emerging “Physical AI” (robotics) layer.
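Simple arithmetic converts the megawatt figures above into annual energy. The Python sketch below turns a facility's power draw into yearly energy use and a rough household equivalent. It rests on two assumptions that do not come from this article. First, it assumes continuous operation at the stated draw. Real facilities run below peak, so treat the result as an upper bound. Second, it uses a round figure of 10,500 kWh of electricity per household per year.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours in a non-leap year

# Assumption: ~10,500 kWh of electricity per household per year (a round figure;
# actual household consumption varies widely by country and climate).
HOUSEHOLD_KWH_PER_YEAR = 10_500


def annual_energy_mwh(power_mw: float, utilization: float = 1.0) -> float:
    """Yearly energy (MWh) for a facility drawing power_mw at an average utilization."""
    return power_mw * utilization * HOURS_PER_YEAR


def household_equivalent(power_mw: float, utilization: float = 1.0) -> int:
    """Approximate number of households with the same yearly electricity use."""
    kwh = annual_energy_mwh(power_mw, utilization) * 1_000  # 1 MWh = 1,000 kWh
    return round(kwh / HOUSEHOLD_KWH_PER_YEAR)


for mw in (300, 500):
    twh = annual_energy_mwh(mw) / 1_000_000  # 1 TWh = 1,000,000 MWh
    print(f"{mw} MW -> {twh:.2f} TWh/yr, ~{household_equivalent(mw):,} households")
```

At 300 MW, this comes to about 2.6 TWh a year. Under the stated household assumption, that is on the order of 250,000 households. Lowering `utilization` scales both figures down proportionally.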

2. Hardware Inputs: The Semiconductor Frontier

The hardware layer converts foundational inputs into raw processing power. This segment of the AI technology stack covers the design and fabrication of specialized chips. Since 2010, hardware investment has surpassed $200 billion. The segment is defined by extreme geographic and corporate concentration.

- The Foundry Oligopoly: Just four companies (TSMC, Samsung, SMIC, and UMC) account for roughly 90% of global foundry revenue.

- The Rise of Custom Silicon (ASICs): To avoid NVIDIA’s roughly 75% gross margins and its supply bottlenecks, hyperscalers are deploying in-house chips such as Google’s TPU, Amazon’s Trainium, and Meta’s MTIA.

- New Bottlenecks: Scarcity has shifted from raw chips to Advanced Packaging (CoWoS) and High-Bandwidth Memory (HBM), which can account for up to 40% of a chip’s total cost.

3. AI Infrastructure: The Financial & Physical Model

The AI infrastructure layer comprises the facilities that host intelligence: data centers, connectivity networks, and cloud platforms. Capital intensity is highest here, and this is where “Digital Sovereignty” is won or lost. The segment is expanding rapidly, and the US currently holds over 40% of installed global capacity.

- Internalized Stacks: Leading tech firms are “internalizing” infrastructure to control costs and deployment. Owning the physical data center can lower long-term inference costs and reduces dependence on third-party cloud providers.

- The “Neocloud” Phenomenon: Specialized GPU-cloud providers have emerged to serve AI-intensive workloads. Providers like CoreWeave have disrupted legacy cloud by offering fully GPU-dedicated environments with faster deployment cycles than traditional hyperscalers.

- Sovereign Clouds: National initiatives (especially in Europe and the Middle East) focus on keeping critical workloads under local governance to ensure data security and regulatory compliance.

- Meta Compute: Meta’s “Meta Compute” initiative is a prime example of an internalized stack. It aims to control the full physical and financial cycle of intelligence. Dina Powell McCormick, Meta’s newly appointed president, helps guide the company’s strategy for scaling what it calls “personal superintelligence.”
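At its core, the “internalized stack” argument is a build-versus-rent calculation. The Python sketch below estimates how many months of avoided cloud spend it takes to recover the up-front cost of an owned facility. All figures are hypothetical. The model also deliberately ignores financing costs, depreciation, hardware refresh cycles, and utilization risk, and each of these can move the answer substantially.

```python
import math


def breakeven_months(capex: float, owned_opex_per_month: float,
                     cloud_cost_per_month: float) -> float:
    """Months until cumulative cloud savings repay the up-front build cost.

    Returns math.inf when owning is not cheaper to run than renting.
    """
    monthly_savings = cloud_cost_per_month - owned_opex_per_month
    if monthly_savings <= 0:
        return math.inf
    return capex / monthly_savings


# Hypothetical figures in USD: $120M to build and $2M/month to run,
# versus $7M/month to rent equivalent GPU capacity.
print(breakeven_months(120e6, 2e6, 7e6))
```

Under these assumptions, owning becomes cheaper after the breakeven point. That is the sense in which internalization *can* lower long-term costs. If renting costs no more per month than running an owned facility, the build never pays back.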

4. The Data Layer: The Quality Control Checkpoint

If infrastructure is the body, data is the bloodstream. The data layer covers the acquisition, cleaning, and labeling of information. Performance and trust now depend directly on data governance, not just on data volume. Investment in data solutions is expected to exceed $90 billion annually by 2030.

- Governance as a Moat: In 2026, leadership is defined by provenance and lineage tracking. Metadata management and role-based access are now treated as essential infrastructure, not just compliance overhead.

- The Cost of Inference: Every AI execution (inference) incurs a variable cost. Startups must model these costs accurately; for example, high-intensity LLM interactions can cost hundreds of dollars per client daily in compute burn.

- Data Freshness: The value of a model is increasingly tied to its access to real-time, high-quality proprietary data rather than stale public crawls.
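Modeling inference cost usually starts from token volumes and per-token prices. The Python sketch below estimates the daily variable cost of serving one client. The usage profile and the prices are hypothetical placeholders: $3 per million input tokens and $15 per million output tokens. Substitute your provider's actual rates and your own traffic data.

```python
from dataclasses import dataclass


@dataclass
class InferenceProfile:
    requests_per_day: int            # model calls made on behalf of one client
    input_tokens_per_request: int    # prompt + context tokens sent per call
    output_tokens_per_request: int   # tokens generated per call


def daily_inference_cost(profile: InferenceProfile,
                         usd_per_m_input: float,
                         usd_per_m_output: float) -> float:
    """Variable compute cost (USD) per client per day, given per-million-token prices."""
    input_tokens = profile.requests_per_day * profile.input_tokens_per_request
    output_tokens = profile.requests_per_day * profile.output_tokens_per_request
    return (input_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000


# Hypothetical agent-heavy client: 5,000 calls/day with long contexts.
heavy = InferenceProfile(requests_per_day=5_000,
                         input_tokens_per_request=20_000,
                         output_tokens_per_request=1_000)
print(daily_inference_cost(heavy, usd_per_m_input=3.0, usd_per_m_output=15.0))
```

With these assumptions, one agent-heavy client costs about $375 a day, which falls in the “hundreds of dollars” range mentioned above. Here, long input contexts account for most of that figure, not the generated output.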

5. AI Models & Algorithms Layer: The General Logic Layer

Foundation models provide the general-purpose reasoning that applications build on. Investment in this layer is growing by 25% to 35% annually. The focus is shifting from “Brain Building” to functional specialization.

- Model Convergence: Raw reasoning capabilities are becoming commoditized. As models reach parity, the competitive moat shifts from the base model to how it is fine-tuned for specific industry needs.

- Large Action Models (LAMs): AI is moving from “thinking” to “doing.” LAMs can interact directly with software APIs to execute tasks, closing the gap between a prompt and a completed business process.

- Small Language Models (SLMs): SLMs are gaining traction because they are sufficient for an estimated 90% of enterprise use cases. They are cheaper to run, easier to secure, and can be deployed at the “Edge” (on-device) for real-time applications.
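At the execution layer, the “doing” attributed to LAMs typically comes down to turning a structured action proposed by a model into a real API call. The Python sketch below shows only that final step, under several assumptions. The JSON shape (`tool` plus `arguments`), the two tool functions, and the allow-list are all hypothetical. The model's output is also hard-coded here instead of being produced by an actual model.

```python
import json
from typing import Callable


# Hypothetical business functions the model is allowed to trigger.
def create_invoice(customer_id: str, amount: float) -> str:
    return f"invoice created for {customer_id}: {amount:.2f}"


def send_email(to: str, subject: str) -> str:
    return f"email sent to {to}: {subject}"


# Explicit allow-list: only these names can ever be dispatched.
TOOLS: dict[str, Callable[..., str]] = {
    "create_invoice": create_invoice,
    "send_email": send_email,
}


def execute_action(model_output: str) -> str:
    """Parse a JSON action emitted by a model and dispatch it to an allow-listed function."""
    action = json.loads(model_output)
    name = action["tool"]
    if name not in TOOLS:
        raise ValueError(f"tool not allowed: {name}")
    return TOOLS[name](**action["arguments"])


# Stand-in for a model response (hard-coded for illustration).
print(execute_action(
    '{"tool": "create_invoice", "arguments": {"customer_id": "C-42", "amount": 1250}}'
))
```

Restricting dispatch to an explicit allow-list is one simple way to stop an action-taking model from calling arbitrary functions. A production system would also need argument validation, permission checks, and logging.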

6. Applications: The Frontlines of Value Capture

This is where the AI value chain delivers tangible results, with annual investment here potentially reaching $1.5 trillion by 2030. Strategic value has shifted from the “Model Makers” to the “Vertical AI” providers who solve the “last mile” problem.

- Agentic AI Platforms: 2026 is the year of autonomous agents. These are systems that plan and execute multi-step tasks across functions like legal discovery, fraud detection, and supply chain routing.

- Physical AI & Robotics: Intelligence is moving into humanoid robots and smart infrastructure. Power grids and manufacturing floors now feature embedded “Edge AI” that perceives and acts on the physical environment.

- The “Outcome-Based” Pivot: To survive high inference costs, application providers are moving away from subscriptions toward Outcome-Based Pricing, charging based on the actual value created (e.g., “Price per Fraud Prevented”).
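A few lines of arithmetic show the pressure behind outcome-based pricing. The Python sketch below compares one hypothetical month for a fraud-detection customer under two pricing models: a flat per-seat subscription and a per-fraud-prevented fee. Both are measured against the same inference bill. Every number is invented for illustration. The example demonstrates the mechanism and does not imply that outcome pricing always earns more.

```python
def subscription_revenue(seats: int, usd_per_seat: float) -> float:
    """Flat fee: revenue stays fixed however much inference the customer consumes."""
    return seats * usd_per_seat


def outcome_revenue(outcomes: int, usd_per_outcome: float) -> float:
    """Outcome-based fee, e.g. a price per fraud prevented."""
    return outcomes * usd_per_outcome


def gross_profit(revenue: float, inference_cost: float) -> float:
    return revenue - inference_cost


# Hypothetical month for one fraud-detection customer (USD).
inference_cost = 6_000.0
flat = subscription_revenue(seats=50, usd_per_seat=100.0)          # 5,000
per_outcome = outcome_revenue(outcomes=400, usd_per_outcome=25.0)  # 10,000

print("subscription:", gross_profit(flat, inference_cost))
print("outcome-based:", gross_profit(per_outcome, inference_cost))
```

Under the flat fee, heavy usage pushes inference cost up while revenue stays fixed. Under outcome pricing, revenue rises with the value delivered. However, the provider then carries the risk that few outcomes occur.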

| Application Domain | Key AI Use Case | Real-World Impact |
| --- | --- | --- |
| Healthcare | Ambient Documentation | 30% reduction in clinician burnout |
| Finance | Real-Time Credit Scoring | Instant loan approvals with 99% accuracy |
| Logistics | Autonomous Routing | 10-15% fuel efficiency gains |
| Legal | Legal Discovery | Increase in conversion rates |

MLOps: The Operational Backbone of the AI Value Chain

Managing the AI value chain at scale requires robust MLOps (Machine Learning Operations) platforms to provide trust, control, and operational resilience across the entire AI development lifecycle.

In 2026, MLOps is no longer just a technical choice but a strategic necessity for financial sustainability and governance. These platforms unify data engineering and machine learning to manage “Compound AI Systems” effectively.

- Lifecycle Management: Platforms like Amazon SageMaker and Databricks Mosaic AI unify the stack from data ingestion to model monitoring.

- Trust and Governance: With regulations like the EU AI Act, MLOps tools now feature automated bias evaluation and end-to-end audit trails.

- Operational Moats: Provenance, explainability, and observability (e.g., Arize Phoenix) are becoming the primary moats for enterprise AI adoption.
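End-to-end audit trails and provenance tracking often rely on tamper-evident logs. The Python sketch below records model lineage as a hash-chained list. Each entry stores the SHA-256 hash of the previous entry, so editing an earlier entry breaks verification. This is a generic technique shown under stated assumptions, and the `LineageRecord` fields are hypothetical. It is not a description of how SageMaker, Databricks, or Arize Phoenix implement their audit features.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass
class LineageRecord:
    # Hypothetical fields; real platforms track far richer metadata.
    model_version: str
    dataset_ids: list[str]
    approved_by: str
    prev_hash: str = ""  # hash of the preceding record ("" for the first one)

    def digest(self) -> str:
        """SHA-256 over a canonical (key-sorted) JSON encoding of this record."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


def append(log: list[LineageRecord], record: LineageRecord) -> None:
    """Link the new record to the current tail of the log, then add it."""
    record.prev_hash = log[-1].digest() if log else ""
    log.append(record)


def verify(log: list[LineageRecord]) -> bool:
    """True if every record still points at the unchanged hash of its predecessor."""
    return all(curr.prev_hash == prev.digest() for prev, curr in zip(log, log[1:]))


log: list[LineageRecord] = []
append(log, LineageRecord("fraud-model-v1", ["txns-2025-q4"], "risk-team"))
append(log, LineageRecord("fraud-model-v2", ["txns-2025-q4", "txns-2026-q1"], "risk-team"))
print(verify(log))  # True

log[0].dataset_ids.append("unapproved-data")  # tamper with history
print(verify(log))  # False
```

This check alone does not protect the newest entry. It becomes tamper-evident only once a later record covers its hash, or once its hash is anchored somewhere outside the log.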

AI Sovereignty: Pathways to Global Competitiveness

AI Sovereignty refers to an economy’s ability to shape and govern its AI ecosystem according to its own values while ensuring strategic control and resilience.

Success in the “intelligent age” depends on strategic interdependence, balancing localized investment with trusted international collaboration, rather than impractical, rigid self-sufficiency.

The Five Pathways to AI Ecosystem Success

The World Economic Forum identifies five archetypes based on an economy’s starting point and coverage across the AI value chain:

| Pathway | Archetype | Strategic Approach | Example |
| --- | --- | --- | --- |
| 1 | Ecosystem Builders | Balanced investments across most elements; high public-private coordination. | Singapore: Maintains control over critical workloads while using global cloud partners. |
| 2 | Adoption Accelerators | Leverage large talent bases and digital public infrastructure to drive rapid commercial deployment. | India: Uses its massive workforce and open data approach to drive global analytics leadership. |
| 3 | Selective Players | Focus on industrial niches (e.g., manufacturing, mobility) where they have a comparative advantage. | Established industrial economies focusing on vertical AI. |
| 4 | Global Leaders | End-to-end investors across all layers, including hardware and frontier models. | US and China, capturing 65% of aggregate global investment. |
| 5 | Emerging Collaborators | Harness energy and land; use international partnerships to jumpstart nascent capabilities. | Economies focusing on “carbon-aware” data centers and regional hubs. |

How Value Is Created and Captured in the AI Value Chain

AI monetization models are evolving to reflect high variable costs. Usage-based pricing has gained significant momentum. It bills per token or per execution, so revenue covers variable compute costs as usage grows. Blended subscription-plus-consumption models are also becoming more prevalent.
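A blended subscription-plus-consumption bill is straightforward to express in code. The Python sketch below assumes one common structure: a fixed monthly platform fee that includes a token allowance, plus a metered charge for tokens above that allowance. The fee, allowance, and overage rate are hypothetical.

```python
def blended_bill(base_fee: float, included_tokens: int, tokens_used: int,
                 usd_per_million_overage: float) -> float:
    """Fixed platform fee plus metered billing for tokens beyond the included allowance."""
    overage_tokens = max(0, tokens_used - included_tokens)
    return base_fee + overage_tokens * usd_per_million_overage / 1_000_000


# Hypothetical plan: $500/month including 50M tokens, then $10 per extra million.
print(blended_bill(500.0, 50_000_000, 20_000_000, 10.0))  # light month
print(blended_bill(500.0, 50_000_000, 80_000_000, 10.0))  # heavy month
```

The fixed fee gives the provider a predictable revenue floor. In heavy months, the metered portion keeps revenue tracking compute costs.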

Margins vary significantly across the generative AI value chain. Hyperscalers have fortress balance sheets, whereas AI labs face a large timing gap between raising funds and earning returns. OpenAI and Anthropic reportedly operate with gross margins of 50% to 60%. By contrast, server assemblers see margins as low as 3% to 4%.
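Gross margin is gross profit divided by revenue. The Python sketch below applies that definition to illustrative midpoints of the ranges above: 55% for an AI lab and 3.5% for a server assembler. It uses a hypothetical $1 billion of revenue for each business to show how differently the same top line converts into gross profit. The cost figures are derived from those percentages, not from any company's reported financials.

```python
def gross_margin(revenue: float, cost_of_revenue: float) -> float:
    """Share of each revenue dollar left after direct costs."""
    return (revenue - cost_of_revenue) / revenue


REVENUE = 1_000_000_000.0  # hypothetical $1B of revenue for each business

for label, cost in [("AI lab", 450_000_000.0), ("server assembler", 965_000_000.0)]:
    margin = gross_margin(REVENUE, cost)
    print(f"{label}: margin {margin:.1%}, gross profit ${REVENUE - cost:,.0f}")
```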

The AI Value Chain by Stakeholder Perspective

- What the AI Value Chain Means for Businesses

Enterprises must choose between building or buying AI solutions. Building internally requires a mature data platform and strong ML engineering teams. Conversely, buying off-the-shelf tools offers faster time-to-value. Successful businesses align their AI strategy with clear business objectives.

- Opportunities for AI Startups

Startups should focus on underserved layers. Differentiation comes from solving the “Last Mile” problem. By automating high-cost, language-intensive tasks, startups compete for labor budgets rather than IT budgets. This creates a massive economic unlock in legacy industries.

- What Investors Look for in the AI Value Chain

Investors prioritize “bottleneck assets” and scalability. They favor sectors where adoption is already visible. Capital-intensive sectors like hardware require massive upfront investment. In contrast, application-layer startups offer faster scalability through higher net dollar retention.

Conclusion

The AI value chain makes one thing clear: sustainable advantage in AI does not come from any single breakthrough, model, or investment.

It comes from how well the entire chain is understood, aligned, and executed.

This spans everything from physical constraints and infrastructure decisions to data governance, deployment economics, and last-mile applications.

As AI moves from experimentation to execution, winners will be those who identify their position in the chain, invest in bottleneck assets, and design systems that balance scale, cost, and trust.

The shift is already underway, from building intelligence to operationalizing it responsibly and profitably.

If you are a founder, enterprise leader, investor, or policymaker, now is the time to map where you sit in the AI value chain, and where you should play next.

Maria Isabel Rodrigues

FAQs

- What is an example of an AI value chain?

Here is an example AI value chain. A mining company supplies raw materials for silicon (Foundational Inputs), and NVIDIA designs a chip that TSMC fabricates (Hardware). AWS hosts that chip in its data centers (Infrastructure), and a team cleans and labels training data (Data Layer). OpenAI then trains a model (Foundation Models), and a law firm uses a specialized research bot built on that model (Application).

- Who are the key players in the AI value chain?

The key players across the AI value chain include:

- Foundational inputs: resource and energy providers.
- Hardware: semiconductor giants such as TSMC and NVIDIA.
- Infrastructure: hyperscalers such as Microsoft, Alphabet, and Meta, and neoclouds such as CoreWeave.
- Models and applications: AI labs such as OpenAI and Anthropic.

- Which layer of the AI value chain is most profitable?

Currently, the hardware layer (chip design) and foundation model providers capture significant value. However, value is shifting toward vertical applications that automate high-cost labor tasks.

- How does the Generative AI value chain differ from traditional AI?

The generative AI value chain shifts the focus toward inference costs and multimodal capabilities. It moves the AI ecosystem from simple automation to autonomous agents that can plan and execute complex workflows.

- What skills are needed across the AI value chain?

Essential skills required across the AI value chain include AI engineering, data stewardship, MLOps orchestration, and prompt design. Leaders also need expertise in AI governance and ethical compliance.