Rampiq’s Liudmila Kiseleva on Revolutionizing B2B IT and SaaS Marketing in 2024

Nanoprecise Sci Corp: Transforming Predictive Maintenance with AI and IIoT

DreamOnTech’s Joël Guéguen on Pioneering a Global Tech Revolution: From Start-Up to MMA Leader

Reshma Saujani: The Woman Who Dares to Dream Big and Change the World of Opportunities

Michael Park: Orchestrating Growth and Transformation in Marketing Renaissance with Optimism and Empathy

Liudmila Kiseleva, a highly skilled online marketer, has helped B2B

Predictive maintenance is a key component of Industry 4.0, the

Joël Guéguen is a serial entrepreneur and innovator who has

Reshma Saujani is a prominent activist and the founder and

Recent Posts

Articles

The Iconic Revolution of Custom Chinese Takeout Boxes

Craving Chinese food? The distinctive aroma, the mouth-watering look, and the fact that it’s light on the stomach are enough to win the heart of every foodie out there. But what makes these dishes look even more tempting? Here is the SECRET: it is the packaging. At times, people don’t

Predictive Maintenance: A Detailed Guide for Business People

Can You Afford Not to Have Commercial Insurance? Find Out Now!

An In-Depth Guide to Craft Your Career in Data Science

Editors' Choice

Business Legacy Created by Jute Baron Ghanshyam Sarda

Leadership, vision, and creating a positive impact in the jute industry: Ghanshyam Sarda, a renowned industrialist and

Aleksandr Kretov’s Holistic Business Approach

Aleksandr Kretov, a shareholder in the Ariant Group of Companies, has spent over 25 years in

The Role of Continuous Emissions Monitoring Systems in Achieving Sustainable Industrial Practices

In an era where environmental sustainability is a top priority, industries face increasing pressure to

How to Organize a Warehouse: The Best Tips for Businesses

Did you know that 59% of warehouses report that they utilize more than 90% of their space? Whether

Franchisors' Corner

Veronica’s Insurance: Providing Proliferative Franchising Strategies

Over the years, franchise businesses have undergone significant changes. Initially, most businesses relied on traditional business

Time to Eat Delivery: Delivering Happiness with Ace Customer Service

Over the last decade, people’s lives have shifted from a physical dimension to a digital world, where a

Floor Coverings International®: Trailblazing the Custom Flooring Industry

The flooring industry is one of the fastest-growing industries in America due to factors such as increased construction

Fingernails2Go: Revolutionizing the Nail Art Industry

In the last few years, technology has played a vital role in revolutionizing industries across the globe. The

Fantastic Services: The One-stop Franchise for all Home Services

Today, collaboration in business is essential. Due to numerous advantages provided by the emerging technologies—getting employees, franchisees, and

Solatube Home: Pioneer in Daylighting and Ventilation Franchising

Today, with a nationwide shortage of housing and record-high home prices, a majority of homeowners in the U.S.

Industry News

How to Prepare for a Career in the Exciting World of Ecommerce Customer Support

Do you love working with people and solving problems? If you’re good at communication and can

How a Leadership and Management Test Elevates Pre-Employment Evaluation

Have you ever wondered how to pick the best leaders for your team? A leadership and

The Role of Advanced Payment Solutions in Digital Ordering Systems

In the rapidly evolving landscape of digital commerce, advanced payment solutions play a pivotal role in

Decoding the Mystery: Auto Transport Brokers and How They Streamline Your Vehicle’s Journey

In today’s dynamic world, relocations and vehicle purchases often transcend geographical boundaries. Whether you’re relocating across

Top 12 Crypto Exchanges and Trading Apps of April 2024

In the fast-paced world of cryptocurrencies, having access to the best platforms as well as apps

The Modern Group: Australia’s Leading Home Improvement Solutions Provider

When making improvements to your home, selecting the best products for your project is crucial. After

The Complete Guide to Planning a Home Improvement Project

Embarking on a home improvement project can be an exhilarating endeavor, especially in sunny Florida where

Exploring the Benefits of Harver Assessments in Modern Recruitment

To make better hiring choices, companies are now integrating external pre-employment evaluation tools in their recruitment

Mirror Coverage

Competitive Intelligence: What It Is & Why Your Business Needs It

Are you considering competitive intelligence for your business? Incorporating it can enhance your market position

The Evolution of Expense Management Software: Trends to Watch

In today’s fast-paced business landscape, keeping track of expenses is crucial for maintaining financial health

Safety and Maintenance Tips for Long-Lasting Commercial Signage

Maintaining signage is essential for any business wanting to create a lasting and impactful presence.

The Role of AI and Machine Learning in Metrology Software Development

Over the years, the rapid growth of artificial intelligence (AI) and machine learning (ML) has

Mirror Education

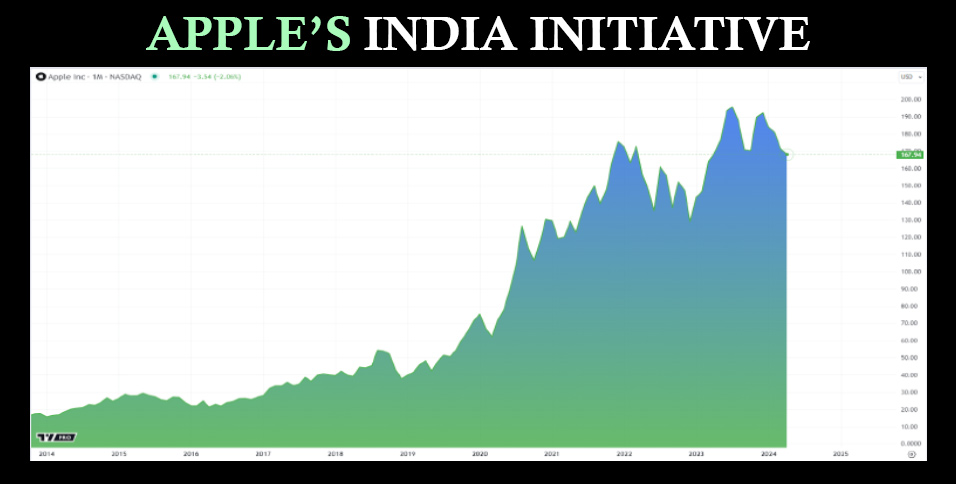

Apple’s India Initiative

Diversifying production is an essential part of any global company’s strategy, and Apple is no

Analyzing the Impact of Online Learning Platforms on Plagiarism Trends in Higher Education

Online learning platforms are the next modern trend in higher education and are extremely popular

9 Ways to Utilize Machine Learning Algorithms in Compensation Claims

In today’s fast-paced corporate world, technology and integrity are crucial to fair procedures and fraud

Women's ERA

Catherine Vlaeminck: Empowering a Tech Leader to Disrupt an Industry

In today’s world driven by data and the need for security, data storage and cyber

Teresa Barreira: Empowering the Leaders of Tomorrow

As I glance through the headlines of Kamala Harris making history as the first female Vice

Selena Proctor: Shattering The Glass Ceiling For The Women Leaders Of Tomorrow

We are all aware of the contributions of women from Marie Curie to Lady Ada Lovelace

Monica Eaton-Cardone: A Trailblazer inspiring the Next Generation of Female Leaders

The role of women in leadership positions has significantly changed over the years. Women have

Expert Views

Managing Distractions That Threaten Your Focus

In the fast-paced, high-stakes world of business leadership, maintaining focus is paramount. Executives and business

Will Casinos Still Hold Their Top Spot in the Gaming Industry in 2023?

As long as the online casino operators keep things fresh for players, there’s no reason

Pedestrian Laws: Walking Is Good but May Turn Risky Soon Without Road Safety Awareness!

Everyone promotes walking for better health and a better environment. But like everything else, it

Football Competitions with the Highest Prize Money

Sports has evolved into one of the most profitable and lucrative businesses in the 21st